How AI is Changing Online Fraud and What You Need to Know

Artificial Intelligence is reshaping countless industries, but it’s also changing the face of online fraud. Scammers are using AI tools to create fake profiles, refine social engineering tactics, and mimic voices or writing styles to deceive individuals and businesses alike. The alarming part? These scams are getting harder to spot, which makes it crucial to stay informed and cautious. As AI continues to evolve, understanding its role in fraud is no longer optional; it’s a necessity. Machine learning, for instance, is already aiding fraudsters, as detailed in this breakdown of disability fraud schemes. Keep reading to learn about the risks and the steps you can take to protect yourself.

Understanding AI’s Impact on Online Fraud

Artificial Intelligence has revolutionized fraud tactics, intensifying the challenge of recognizing scams. On one hand, it powers tools for identifying suspicious activities; on the other, it fuels more sophisticated attacks. By understanding AI’s dual role in fraud, you can better protect yourself and your data.

AI Algorithms in Fraud Detection

AI algorithms are pivotal in spotting fraudulent activity and predicting risk. Banks, for instance, rely on machine learning models to detect anomalies in spending habits quickly. These tools analyze patterns, flag suspicious transactions, and help block scams in real time.

Here’s how AI improves fraud detection:

- Behavior Analysis: Algorithms monitor user behavior and identify irregularities, such as multiple failed login attempts.

- Transaction Monitoring: AI can assess vast amounts of financial data, flagging unusual purchases or transfers.

- Facial Recognition: Used during account sign-ups, it helps ensure the individual is who they claim to be.
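To make behavior analysis more concrete, here is a minimal Python sketch of one such rule: flagging an account that racks up too many failed logins in a short period. The class name `FailedLoginMonitor` and its default threshold (5 failures within 300 seconds) are hypothetical choices for illustration, not values any particular bank uses. In practice, a signal like this would usually feed into a larger model rather than act on its own.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Flag accounts with too many failed logins inside a sliding time window.

    Hypothetical helper: the default threshold and window are illustrative
    assumptions, not values used by any real bank or product.
    """

    def __init__(self, max_failures: int = 5, window_seconds: float = 300.0):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        # Per-account queue of failure timestamps, oldest first.
        # Assumes timestamps for each account arrive in chronological order.
        self._failures = defaultdict(deque)

    def record_failure(self, account: str, timestamp: float) -> bool:
        """Record one failed login and return True if the account should be flagged."""
        events = self._failures[account]
        events.append(timestamp)
        # Discard failures that have slid out of the time window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) >= self.max_failures


if __name__ == "__main__":
    monitor = FailedLoginMonitor(max_failures=3, window_seconds=60)
    print(monitor.record_failure("alice", 0))   # False
    print(monitor.record_failure("alice", 10))  # False
    print(monitor.record_failure("alice", 20))  # True: 3 failures within 60 s
    print(monitor.record_failure("bob", 20))    # False: counts are per account
```

Keeping a separate queue per account means one user’s mistakes never count against another, and old failures expire on their own instead of lingering forever.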

Yet, no solution is foolproof. Scammers are constantly finding ways to outsmart these systems. To better understand the capabilities and challenges of AI in fraud prevention, check out AI and fraud: opportunities and challenges.

Fraudsters Leveraging AI

Scammers aren’t sitting idly by. They’ve adopted AI to boost their schemes, and with powerful tools at their disposal, they’re succeeding in ways not possible just a few years ago.

Here’s how fraudsters are using AI:

- Deepfakes: These let scammers mimic real voices or appearances. Imagine receiving a video call from your “boss” asking for sensitive files—you may never suspect a thing.

- Phishing Automation: AI generates personalized messages faster, tricking more victims in less time.

- Bot Attacks: Fraudsters use AI-driven bots to scrape data, crack passwords, or flood websites with fake traffic.

A notable example of AI misuse is its role in financial scams, as highlighted in this article on AI-enhanced scams. Tools like chatbots can also mimic human behavior, making fraud feel alarmingly authentic to victims.

With AI acting as both guard and adversary in online fraud, keeping up with its latest developments is vital. Protecting yourself means knowing not only the benefits but also the risks of these technologies.

Types of AI-Driven Online Fraud

As Artificial Intelligence becomes more powerful, fraudsters are increasingly weaponizing it. From phishing campaigns that feel eerily personal to the chilling realism of deepfakes, AI-driven fraud is a growing threat. Let’s break down the key ways scammers are using AI to deceive and exploit their victims.

Phishing Scams Enhanced by AI

Phishing scams have always been a significant concern, but AI has taken them to a new level. Fraudsters can now use AI-powered text generators to craft convincing emails or messages that mimic the tone and writing style of trusted organizations or individuals. These messages are no longer riddled with errors or sloppy formatting. They look professional, which can lead even cautious recipients to click on malicious links.

AI also personalizes phishing attempts by analyzing publicly available information. For example:

- Mining social media profiles to include personal details in messages.

- Mimicking colleagues’ emails with frightening accuracy.

- Creating near-perfect website replicas to steal login credentials.

The stakes are high because AI increases both the believability and the scale of these scams. To delve deeper into how phishing scams are evolving, explore AI-powered phishing techniques explained.
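One defensive habit that counters replica websites is checking whether a link’s domain is suspiciously close to, but not the same as, a site you trust. Below is a minimal Python sketch built on the standard library’s `difflib.SequenceMatcher`. The `TRUSTED_DOMAINS` list, the `imitated_domain` function, and the 0.8 similarity cutoff are all hypothetical choices for illustration. A real filter would need more robust checks, such as handling look-alike Unicode characters, so treat this as a demonstration of the idea only.

```python
from difflib import SequenceMatcher
from typing import Optional, Sequence

# Hypothetical allow-list of domains the user or company actually trusts.
TRUSTED_DOMAINS = ("paypal.com", "amazon.com", "mybank.example")


def imitated_domain(domain: str, trusted: Sequence[str] = TRUSTED_DOMAINS,
                    min_ratio: float = 0.8) -> Optional[str]:
    """Return the trusted domain that `domain` appears to imitate, or None.

    An exact match with a trusted domain is treated as genuine. A domain whose
    character-level similarity to a trusted one reaches `min_ratio`
    (an assumed cutoff) is treated as a likely look-alike.
    """
    domain = domain.lower().rstrip(".")
    if domain in trusted:
        return None
    for good in trusted:
        if SequenceMatcher(None, domain, good).ratio() >= min_ratio:
            return good
    return None


if __name__ == "__main__":
    print(imitated_domain("paypa1.com"))   # paypal.com (digit 1 instead of l)
    print(imitated_domain("paypal.com"))   # None (the genuine site)
    print(imitated_domain("example.org"))  # None (not similar to any entry)
```

The exact-match check runs first so that the genuine site is never flagged against itself; everything else is scored for similarity against each trusted entry.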

Automated Identity Theft

AI enables the automation of identity theft in ways that were unthinkable a decade ago. Fraudsters use AI tools to scrape personal data from multiple platforms, combining stolen pieces into complete profiles. This creates a chillingly efficient pipeline for stealing identities.

Some advanced tactics include:

- Predicting weak passwords using AI algorithms.

- Deploying bots to access accounts with brute-force attacks.

- Generating synthetic identities—a mix of real and fake data—to bypass traditional verification systems.

The automation aspect means fraudsters can target thousands of victims simultaneously. If you want to understand how they gather this data, check out AI-driven fraud exploitation.

Deepfakes and Their Threat

Deepfakes are among the most unsettling tools scammers use today. They exploit AI-generated imagery and audio to impersonate real people with uncanny accuracy. Imagine a scammer creating a video of a CEO “instructing” an employee to send sensitive company information or money. The results can be devastating.

Deepfake scams often involve:

- Fake videos or voice clips impersonating trusted individuals.

- Altered video calls, making victims believe they’re speaking to someone they know.

- Blackmail schemes using fake but realistic content.

The accessibility of deepfake tools means even small-time scammers can create convincing content. Stay informed about the risks by reading how AI scams are evolving.

Knowing these types of scams is the first step toward protecting yourself. AI isn’t solely a tool for criminals; defenders can put it to work just as effectively.

Preventive Measures Against AI-Driven Fraud

The rise of AI in online fraud poses a serious challenge for businesses and individuals alike. However, with the right strategies, it’s possible to stay one step ahead. From using AI itself for detection to fostering awareness and addressing the legal landscape, preventive measures can help minimize the risks of AI-driven scams.

Implementing AI in Fraud Detection

AI isn’t just a tool for fraudsters—it’s one of the most effective weapons we have against online crime. Businesses are increasingly adopting AI-driven fraud detection tools to quickly analyze vast amounts of data and flag suspicious behaviors. But why is this important, and how can it help you?

AI excels at identifying patterns and anomalies that may go unnoticed by human analysts. Sophisticated algorithms can:

- Monitor real-time transactions and user behaviors, flagging irregular activities.

- Detect fake profiles by analyzing speech, text, or image patterns.

- Predict potential fraud using historical data, allowing preemptive action.
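The simplest version of predicting fraud from historical data is to compare a new transaction with a customer’s own spending history. The Python sketch below uses a z-score, which measures how many standard deviations a new amount sits from the customer’s average. The function name `flag_unusual_amount` and the cutoff of 3 standard deviations are assumptions chosen for illustration. Real fraud models weigh many more signals, such as location, merchant, device, and timing.

```python
import statistics


def flag_unusual_amount(history: list[float], amount: float,
                        threshold: float = 3.0) -> bool:
    """Hypothetical rule: flag `amount` if its z-score against the customer's
    past transaction amounts exceeds `threshold` (an assumed cutoff)."""
    if len(history) < 2:
        # Too little history to estimate normal spread; leave it to other checks.
        return False
    mean = statistics.mean(history)
    spread = statistics.stdev(history)  # sample standard deviation
    if spread == 0:
        # Every past purchase had the same amount, so any change stands out.
        return amount != mean
    z_score = abs(amount - mean) / spread
    return z_score > threshold


if __name__ == "__main__":
    history = [42.0, 38.5, 45.0, 40.0, 39.5]  # made-up example amounts
    print(flag_unusual_amount(history, 41.0))   # False
    print(flag_unusual_amount(history, 950.0))  # True
```

A z-score is easy to explain to analysts, but it assumes spending is fairly stable. It works as a baseline, not as a substitute for trained models.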

For example, predictive analytics can warn companies of likely attacks before they happen, as explained in this guide on preventing AI-generated fraud. Whether you run a small business or a large e-commerce platform, integrating AI into your fraud prevention systems is no longer optional; it’s essential.

Education and Awareness

Your strongest line of defense against AI-driven fraud is informed vigilance. Scammers thrive where there is a lack of understanding. Educating both employees and end-users about the risks and warning signs of AI-powered scams is crucial.

What should businesses and individuals focus on?

- Spotting Fake Content: Train users to recognize deepfakes, unusual email requests, and fake websites.

- Phishing Awareness: Help people identify phishing attempts with real examples and regular updates on new tactics.

- Questioning Authenticity: Encourage skepticism. A quick second-guess can prevent significant losses.

The costs of staying uninformed can be catastrophic. For individuals, this might mean falling for a phishing email generated with uncanny precision. Businesses risk data breaches and financial fraud. For more actionable advice, see this guide on how to handle AI-powered scams.

Legal and Ethical Considerations

With the rise of AI in fraud prevention, there are significant legal and ethical aspects to consider. Ensuring compliance with data privacy laws and maintaining ethical boundaries while combating fraud should be a top priority.

Key areas to address include:

- Complying with Regulations: Laws like the GDPR impose strict requirements on how personal data is processed, including by AI systems.

- Transparency in AI Usage: Users have the right to understand how their data is used in fraud detection.

- Balancing Privacy and Security: Fraud prevention measures should not infringe on individual privacy or ethical standards.

By staying up to date with legal requirements and best practices, businesses can focus on protecting their customers without crossing ethical lines. You can explore strategies for safeguarding data and managing AI ethically in this article on AI fraud detection.

Building a multi-layered defense system that combines technology, education, and legal compliance builds resilience against even highly sophisticated AI-driven fraud attempts. As threats evolve, our preventive measures must adapt and strengthen, too.

Case Studies of AI in Online Fraud

Artificial Intelligence isn’t just reshaping industries; it’s also enabling more sophisticated online fraud. Scammers are now building AI into their schemes, making these activities harder to detect. This section explores real-world examples of how AI has been weaponized for online fraud, focusing on refund scams.

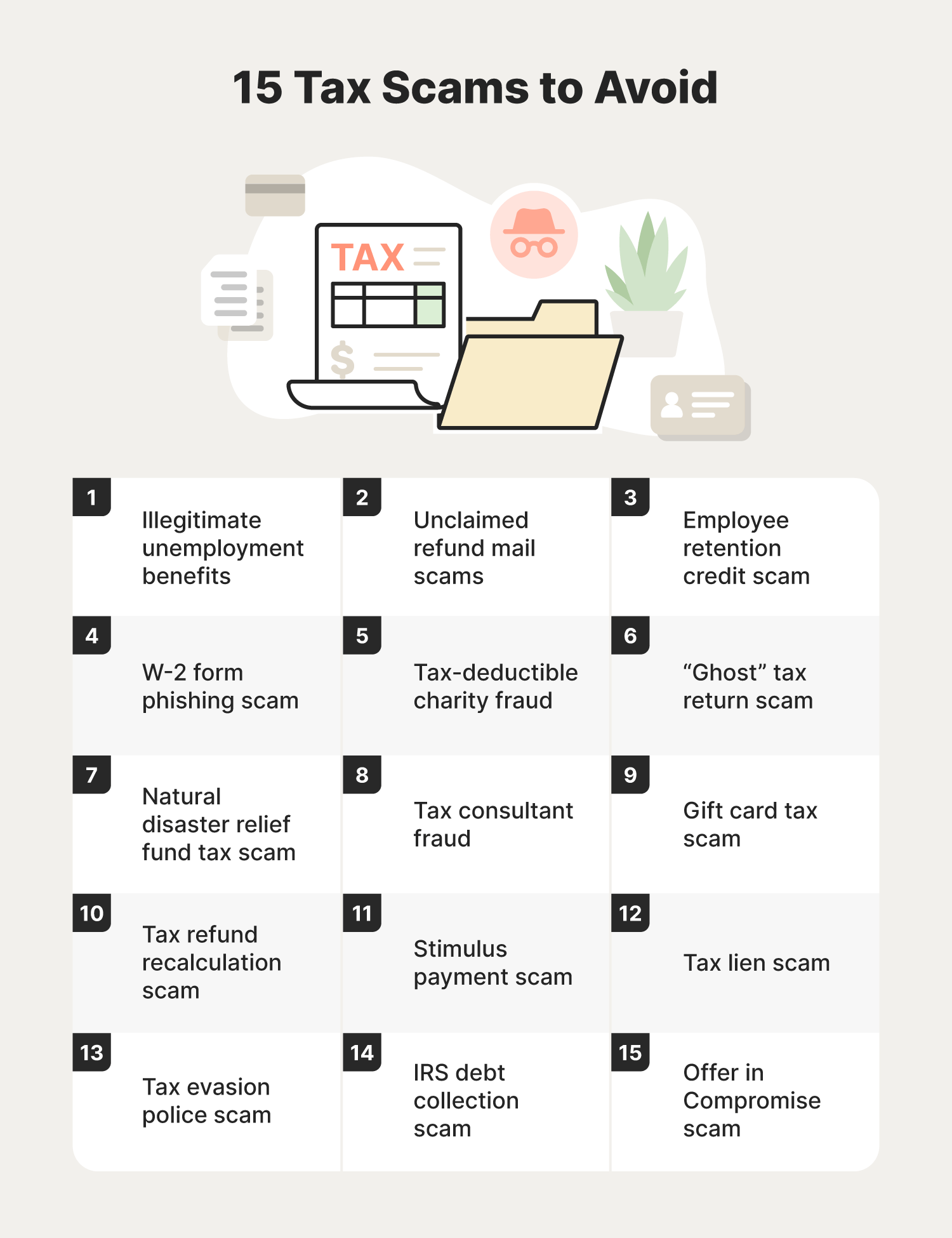

Refund Scams and AI

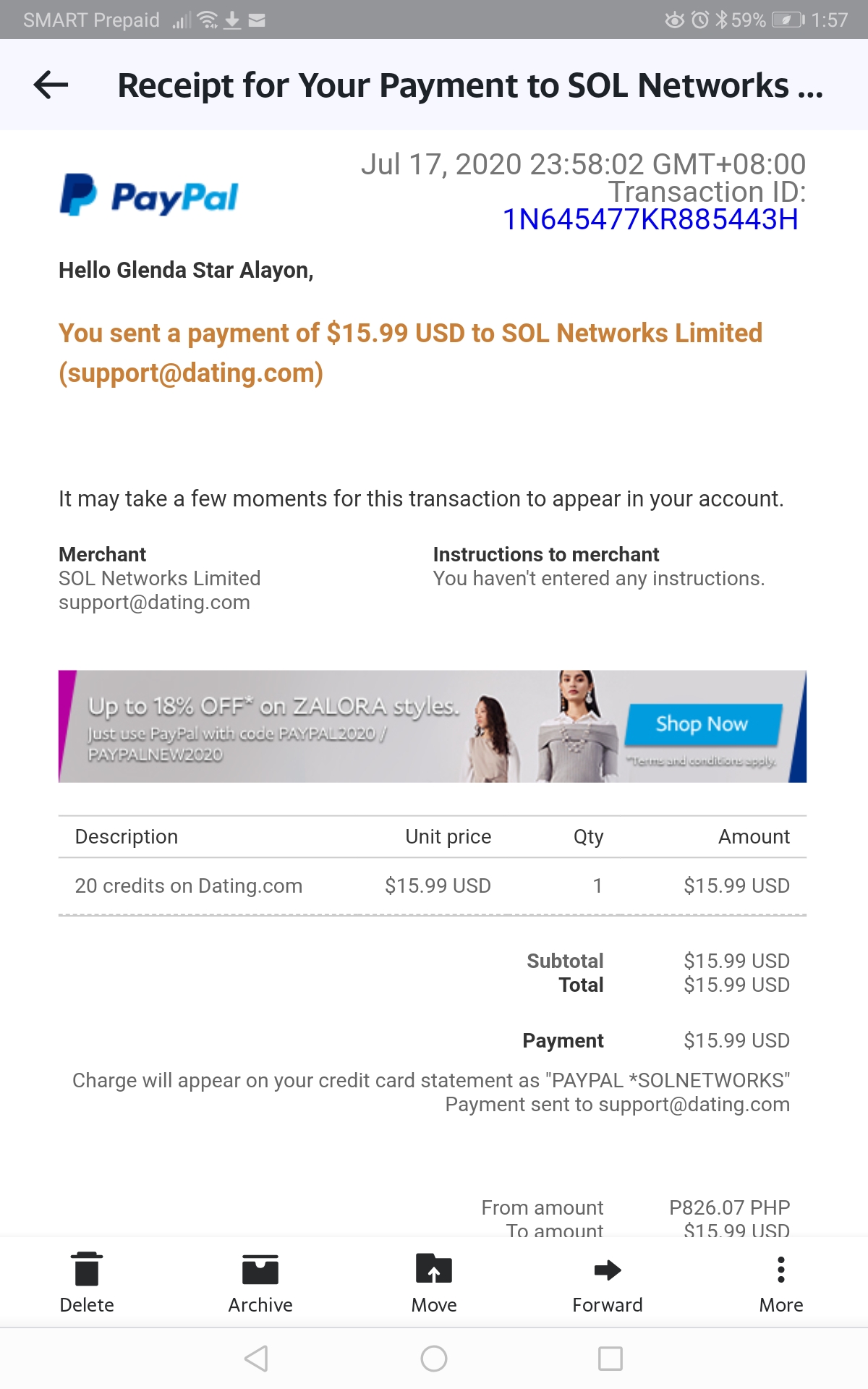

Refund scams have been a longstanding issue, but AI has taken them to a troubling new level. Fraudsters now deploy AI tools to streamline everything from generating fake receipts to impersonating victims or customer service agents. These scams often involve highly convincing interactions that manipulate businesses into issuing unwarranted refunds.

Here’s a glimpse into how AI amplifies refund scams:

- Impersonation: AI-powered voice cloning helps fraudsters pose as customers and convincingly demand refunds they are not owed.

- Document Fabrication: Scammers use AI tools to create fake purchase receipts or transaction records that closely mimic real ones.

- E-commerce Exploitation: Fraudsters game return systems on platforms like Amazon or eBay, using AI to generate endless variations of claims that slip past detection.
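Because AI lets fraudsters churn out many lightly reworded versions of the same claim, one countermeasure is to compare incoming refund claims against each other and flag near-duplicates. This Python sketch normalizes each claim’s text and scores every pair with the standard library’s `difflib.SequenceMatcher`. The function names and the 0.85 similarity cutoff are illustrative assumptions, not any platform’s actual method.

```python
import re
from difflib import SequenceMatcher
from itertools import combinations
from typing import List, Tuple


def normalize(text: str) -> str:
    """Lower-case the text and strip punctuation and extra spaces, so trivial
    edits (an added "!!", a dropped comma) don't hide a reused claim."""
    text = re.sub(r"[^a-z0-9 ]+", " ", text.lower())
    return " ".join(text.split())


def near_duplicate_claims(claims: List[str],
                          min_ratio: float = 0.85) -> List[Tuple[int, int]]:
    """Return index pairs of claims whose normalized text is at least
    `min_ratio` similar (an assumed cutoff)."""
    normalized = [normalize(claim) for claim in claims]
    pairs = []
    # Compare every pair of claims; fine for a small batch only.
    for i, j in combinations(range(len(claims)), 2):
        if SequenceMatcher(None, normalized[i], normalized[j]).ratio() >= min_ratio:
            pairs.append((i, j))
    return pairs


if __name__ == "__main__":
    claims = [
        "Item arrived damaged, the box was crushed and the screen is cracked.",
        "item arrived damaged!! The box was crushed and the screen is cracked",
        "Wrong size delivered, I ordered a medium but received a large.",
    ]
    print(near_duplicate_claims(claims))  # [(0, 1)]
```

Pairwise comparison grows quadratically with the number of claims, so larger systems would need some form of indexing. The point here is only to show how reworded claims can still be matched.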

This report takes a detailed look at how refund fraud schemes operate. AI-generated fake identities, for instance, have played a role in the scams associated with websites like Easyreturn.info and RefundFX.eu. These scams exploit retailers’ vulnerabilities by mimicking customer complaints with alarming accuracy.

AI also helps automate these operations at scale, allowing fraudsters to target multiple businesses simultaneously. High-profile incidents, for example, highlight AI’s role in replica scams aimed at tax refunds, where cybercriminals use lifelike AI-generated imagery or convincingly forged documents to hijack rightful claims. Read more about this phenomenon in this article.

Refund scams powered by AI don’t just steal money—they erode trust. These scams create a significant challenge for e-commerce platforms and online retailers who now find themselves on high alert to prevent losses.

Conclusion

The rise of AI in online fraud highlights the need for constant vigilance and awareness. Scammers are using these technologies to outsmart traditional defenses, making education and proactive measures more essential than ever.

By understanding how AI-driven scams work and staying informed, you can significantly reduce your risk. Share this knowledge with others and encourage conversations about staying safe.

These threats evolve quickly, so staying one step ahead is critical. Keep learning and exploring ways to strengthen your defenses.